How can you measure the real impact of your training?

Return on investment. In the world of learning and development, it is the gold standard for evaluating training initiatives. But how can you measure the real impact of your training? How do you know the learning is ‘sticky’ – making it out of the boardroom and into mahi?

In this article we take a ‘behind the scenes’ look at how Upskills makes sure the learning is impactful and transferable.

Great design

It all starts with great design. What is the issue we’re looking to solve through training? Who are the stakeholders? Where are current levels of skill sitting? Getting clear about this informs our course content design right from the start.

The neuroscience of adult learning tells us that for learning to be effective, it needs to be many things, including:

- Relevant and fit for purpose, strengths-based and engaging

- Designed to create building blocks

- Memorable – through fun, novel ideas, team building, emotions and the power of storytelling

- Practical, with opportunities to practise and nudges to use new learning

- Reinforced through spaced repetition

- Followed up down the track to build in transfer

- Connected, by including ako and mentoring in the mix.

What you measure, counts

We’ve been working alongside our clients for over ten years, refining metrics for success, tracking them, and reporting on them.

A concrete starting point is to use a range of measures, including data your organisation might already be collecting, such as career trajectory, promotions, attendance at work and retention rates.

Here are some examples where Upskills data shows ROI.

39.5% of graduates on an Upskills programme with this client stepped up to further career opportunities (promotion, secondment, selection for further training)

Staff turnover amongst programme participants was 12% lower than the whole-of-site figure: engaging in the programme improved staff retention

Engagement time on this client’s Learning Management System showed that its in-house online learning engaged over 50% of learners

Cycle of evaluation

Who better to ask about their learning gains than the participants themselves? Self-reporting is one of a range of measures we use to paint a complete picture of the learning wins. Evaluation is built into all Upskills programmes at different points in the journey, including the classic Net Promoter question at our end evaluation stage.

Here are a few gold nuggets from our data collection.

Post course, 90% of this group reported that they read and understand more workplace material and 70% speak up more at meetings.

100% of this Emerging Leader cohort said their confidence in their own leadership had increased.

95% of this Digital Skills cohort were more confident using the MS Office suite – Outlook, Teams, Word & Excel

We also ask supervisors and managers about the improvements they see in their people as part of this process.

100% of supervisor respondents strongly agreed they had seen greater engagement by staff in using technology effectively

100% of supervisors strongly agreed that participants’ verbal feedback and participation had improved

All managers reported that they had seen greater understanding and use of Standard Operating Procedures amongst the participant groups.

Net Promoter

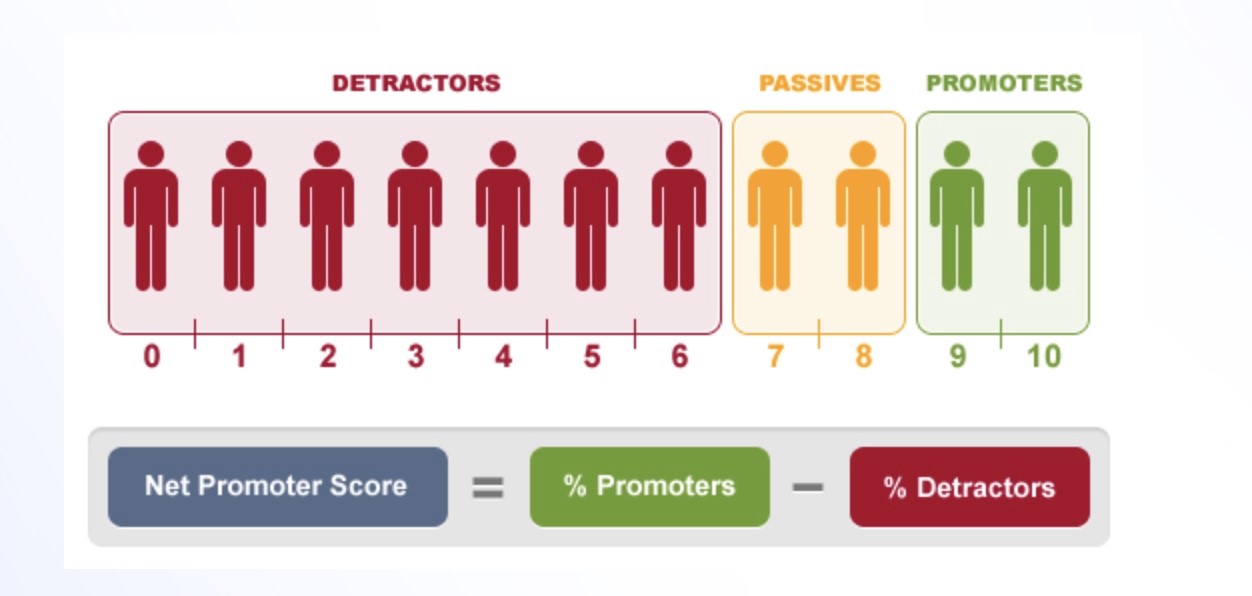

In what sometimes feels like a tough measure, the Net Promoter question asks clients how likely they would be to recommend our service to a colleague or friend on a 1 to 10 scale. Net Promoter methodology holds that scores of 1-6 are detractors, 7 and 8 are passives, and 9s and 10s are promoters.

With possible scores ranging from -100 to 100, any score above 0 is considered good.

- Above 20 is favourable,

- Above 50 is excellent, and

- Above 80 is world-class*

(*Source: Adam Bunker, Qualtrics XM: What is NPS? Your Ultimate Guide to Net Promoter Score)
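For readers who want to see where a score between -100 and 100 comes from, here is a minimal sketch of the standard Net Promoter calculation: the percentage of promoters minus the percentage of detractors, with passives counted only in the total. The function names, the sample ratings and the "needs work" label are assumptions made for illustration; they are not Upskills data or tooling. Treating any rating of 6 or below as a detractor covers both the 1 to 10 scale described here and the 0 to 10 scale used in many NPS surveys.

```python
from typing import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Return % promoters (9-10) minus % detractors (6 or below)."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    # Passives (7-8) affect the score only through the total count.
    return 100 * (promoters - detractors) / len(ratings)


def describe(score: float) -> str:
    """Map a score to the bands quoted in the article (strictly 'above')."""
    if score > 80:
        return "world-class"
    if score > 50:
        return "excellent"
    if score > 20:
        return "favourable"
    if score > 0:
        return "good"
    return "needs work"  # hypothetical label, not from the article


# Hypothetical ratings for illustration only (not Upskills data):
# 6 promoters, 2 passives, 2 detractors out of 10 responses.
sample = [10, 9, 9, 10, 8, 7, 9, 6, 10, 3]
score = net_promoter_score(sample)
print(score, describe(score))  # prints: 40.0 favourable
```

Because passives still count in the total, a group with plenty of 7s and 8s can score lower than its average rating might suggest, which is part of why the measure can feel tough.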

At Upskills, we track our Net Promoter Score on a rolling basis to ensure we have a large enough data set to be meaningful. As of June 2024, across all Upskills programmes (workplace literacy and numeracy, leadership, Train the Trainer, Project Ikuna and customised training), we are sitting at an NPS of 60 (data set = 461).

By way of comparison, benchmarks in New Zealand for tertiary training education sit at 19, with education overall on an NPS of 30.

Continuous improvement drives cost-saving and efficiency

Our workplace literacy programmes and leadership courses empower participants to create a continuous improvement project – here are a few of the projects that have come out of this work which show a clear return on investment:

- One manufacturer reduced waste on one of their product lines by 90% with a zero-cost innovation that involved fabricating dividers on a factory machine.

- Another manufacturer saved $91,000 over two years by introducing more environmentally sustainable machine covers (as opposed to disposable single-use plastic covers) when cleaning.

- One of our emerging leaders pitched the idea of an ice machine to cool product instead of buying ice externally – a cost saving of a staggering $156,000 annually.

When you engage operational staff in problem-solving, the benefits to productivity and efficiency are tangible because you draw on diverse ideas from people who are experts in their area.

Those are just some of the tools we use to measure ourselves and keep improving. If you’re keen on getting a better return on your investment in staff training, get in touch for some ideas. Phone us on 09 622 3979 or complete the form below and one of our team will get in touch.
